Nvidia has been sitting pretty at the top of the gaming GPU market for the past two years, with sole competitor AMD unable to deliver a viable challenger. In that time, we’ve also seen the prices of graphics cards skyrocket and stocks run out completely, thanks to cryptocurrency mining. With consistently high demand and no competition, Nvidia has not really needed to refresh its product line, and has focused on machine learning, big data, and autonomous cars instead. Gamers have had to make do with the GeForce GTX 10 series, which began rolling out with the GeForce GTX 1080 and 1070 in May 2016. Although these cards are powerful, this has been one of the longest product cycles we’ve ever seen in the GPU space, and things have been feeling a bit stale of late.

Several rumoured launch dates came and went this year, and mentions of an upcoming GeForce GTX 11 series started popping up all over the Web, but when the announcement finally happened in late August, what we got was something quite different. Nvidia has dropped its decade-old GeForce GTX naming scheme, debuting three GPUs in a new GeForce RTX series and positioning them above existing products. Codenamed Turing, this first wave of a new generation promises unparalleled realism for gamers, but these GPUs also heavily play into Nvidia’s vision of AI and supercomputing.

We have the middle of the three, the new GeForce RTX 2080, in our lab already. This GPU comes to us in the form of the Zotac Gaming GeForce RTX 2080 Amp graphics card. You can check out our full review of it right here, or read on for a deep dive into the architecture and what’s new.

![]()

Nvidia GeForce RTX series and Turing architecture

While their names suggest that the new GeForce RTX 2070, 2080, and 2080 Ti are replacements for the similarly numbered GeForce GTX 10 series parts, they are all drastically more expensive. Graphics cards built around the new GeForce RTX 2080 Ti cost more than Rs. 1,00,000, while the GeForce RTX 2080 comes in at around Rs. 70,000, and GeForce RTX 2070 cards will start at over Rs. 50,000 when they go on sale in October. Nvidia has said that the GeForce GTX 10 series will continue to live on for a while, which means that you aren’t necessarily getting better performance at each price level, the way most refresh cycles go.

Additionally, Nvidia now has a split pricing strategy for overclocked and non-overclocked graphics cards. The company is also heavily pushing its own Founders Edition cards in retail, and these are not just basic cards with a simple reference-design cooler anymore. They fall into the higher, overclocked price tier, and with this generation, Founders Edition cards are for the first time high-end, overclocked, and quiet. They’re also notable for being a little less expensive than partner cards, even in India. Partners including Asus, MSI, Gigabyte, Galax, and Inno3D should introduce lower priced non-overclocked models at some point in the future, according to Nvidia, but we don’t yet know how significant the difference will be. If you’re buying now, be prepared to pay a premium.

Many people think that the switch from GTX to RTX is down to ray tracing, since Nvidia has made a lot of noise about this technology. Actually, Nvidia quietly says that the R is for “rendering”. These new GPUs are still built around clusters of programmable CUDA cores, which have been improved compared to previous generations, but they are now joined by Tensor cores derived from Nvidia’s AI processors, and also new RT cores designed to handle ray tracing in real time. Nvidia calls this its hybrid rendering model, combining traditional rasterisation, ray tracing, and AI.

![]()

Nvidia Turing (TU102) GPU die

Photo Credit: Nvidia

By standard measures, Nvidia says that graphics performance has risen from 11Tflops (trillion floating-point operations per second) to 14Tflops using just the CUDA hardware, but that goes up to 114Tflops thanks to the Tensor cores. Nvidia also wants us to describe performance using a new unit, rays, to quantify ray tracing bandwidth. Turing is said to achieve up to 10 Gigarays per second. The highest implementation of Turing, codenamed TU102, which powers the GeForce RTX 2080 Ti, has 18.6 billion transistors and is one of the most complex chips ever shipped.

Compared to the Pascal architecture behind the GeForce GTX 10 series, Turing offers developers several new tools to incorporate into games. Neural Services are AI-enabled effects and enhancements such as resolution enhancement, video smoothing, and the ability to fill in missing information or replace unwanted parts of frames without disruption. Mesh shading and variable rate shading allow for different parts of scenes to have different amounts of power expended on them.

Turing also brings GDDR6 graphics memory to consumers for the first time. This is an interesting choice, showing that Nvidia considers HBM (high-bandwidth 3D stacked memory), such as we’ve seen used with AMD’s Vega GPUs, still too complex or expensive for widespread use. GDDR6 improves bandwidth and reduces power consumption compared to GDDR5X.

![]()

Nvidia GeForce RTX 2080 (Turing TU104) block diagram

Photo Credit: Nvidia

DLSS (Deep Learning Supersampling)

A deep dive into Nvidia’s documentation reveals that DLSS actually renders frames at a lower resolution and then upsamples them using machine learning to determine what surfaces and edges need to be tweaked to look better. Because we’re dealing with motion and not stills, there’s some leeway in terms of quality. The company has stated that developers can work with it to develop individual neural networks for each game using Nvidia’s massively powerful DGX AI computers at no charge. By feeding thousands of frames into a neural network and teaching it what is ideal in terms of quality, a model can be trained to sharpen new frames as you generate them by playing a game.

The resulting DLSS “profiles” are claimed to be just a few MB in size, and will be distributed as part of Nvidia’s drivers or GeForce Experience software. What’s more, DLSS transformation is performed on a GeForce RTX GPU’s Tensor cores, not the CUDA cores. This removes the overhead that other antialiasing methods might impose on the CUDA cores, freeing their full capacity for actual gameplay rendering.
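To make the "render low, then upsample" idea concrete, here is a minimal Python sketch. It is not how DLSS itself works internally: the real thing uses a trained neural network running on Tensor cores, while this example swaps in a plain bilinear filter as a stand-in. The function name `upsample_bilinear`, the tiny 2x2 frame, and the 2x scale factor are all assumptions made purely for illustration.

```python
def upsample_bilinear(frame, scale):
    """Upscale a grayscale frame (rows of floats) by an integer factor.

    Bilinear filtering is only a stand-in here for the learned
    upsampling that DLSS performs with a neural network.
    """
    src_h, src_w = len(frame), len(frame[0])
    out = []
    for y in range(src_h * scale):
        # Map the output row back to a fractional source row.
        sy = min(y / scale, src_h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, src_h - 1)
        fy = sy - y0
        row = []
        for x in range(src_w * scale):
            # Map the output column back to a fractional source column.
            sx = min(x / scale, src_w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, src_w - 1)
            fx = sx - x0
            # Blend the four nearest source pixels.
            top = frame[y0][x0] * (1 - fx) + frame[y0][x1] * fx
            bottom = frame[y1][x0] * (1 - fx) + frame[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


# A made-up 2x2 "low resolution" frame: dark on the left, bright on the right.
low_res = [[0.0, 1.0],
           [0.0, 1.0]]
high_res = upsample_bilinear(low_res, 2)

# Fraction of displayed pixels that were actually rendered by the game.
shaded_fraction = (len(low_res) * len(low_res[0])) / (len(high_res) * len(high_res[0]))
```

With a 2x scale in each direction, only a quarter of the displayed pixels are rendered by the game, which is where the performance headroom comes from. The quality of the result then depends entirely on how good the upsampling step is.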

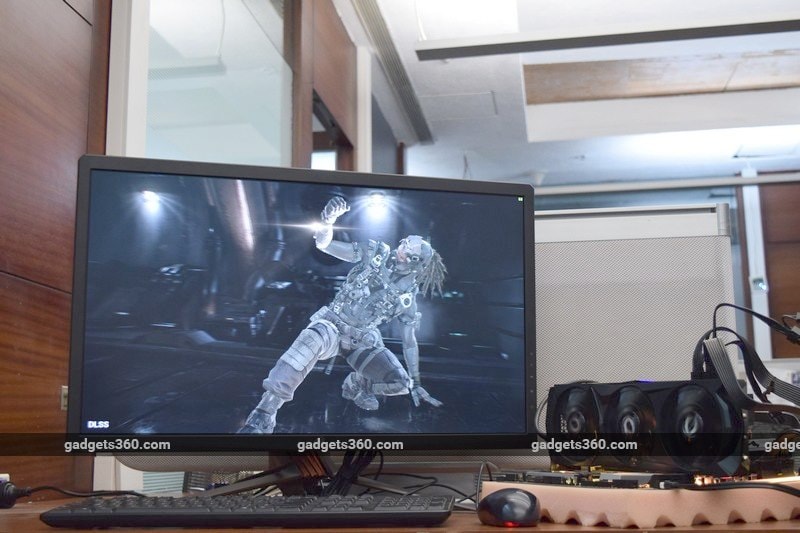

In lieu of any actual released games, Nvidia sent us a copy of the Infiltrator demo that it showed off at its launch event. We were able to run it with and without DLSS enabled. Without it, frame rates averaged between 40 and 50fps as measured by the OCAT analysis tool, and there were one or two brief stutters. With DLSS enabled, there was a clear improvement in smoothness and we observed frame rates of 55-65fps. However, there was some artifacting in a scene with complex transparency and motion effects.
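For a rough sense of what those numbers mean, the short Python sketch below converts the observed ranges into frame times and a percentage gain. Taking the midpoint of each range is our own simplifying assumption, not something the demo or OCAT reports, so treat the result as a ballpark figure only.

```python
def frame_time_ms(fps):
    """Time spent on each frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


# Frame rate ranges observed in the Infiltrator demo (fps).
without_dlss = (40, 50)
with_dlss = (55, 65)

# Assumption: use the midpoint of each range as a representative value.
mid_without = sum(without_dlss) / 2
mid_with = sum(with_dlss) / 2

gain_percent = (mid_with - mid_without) / mid_without * 100

print(f"Without DLSS: {mid_without:.0f}fps, {frame_time_ms(mid_without):.1f}ms per frame")
print(f"With DLSS:    {mid_with:.0f}fps, {frame_time_ms(mid_with):.1f}ms per frame")
print(f"Approximate gain: {gain_percent:.0f} percent")
```

On these midpoints, DLSS takes the demo from roughly 45fps to 60fps, which is about a one-third improvement.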

Ultimately, users are not getting a true 4K visual if they use DLSS at 4K (and we hope that game developers use accurate labelling to inform gamers of this) but the tradeoff is smoothness, and Nvidia bets that users will prefer DLSS when given the chance to see it working. We will have to wait for actual playable games to be released to see whether or not this pans out, but studios are already on board. As of now, 25 upcoming titles, including Darksiders III, Final Fantasy XV, Hitman 2, Playerunknown’s Battlegrounds, and Shadow of the Tomb Raider, have been confirmed to support it.

![]()

Reflections rendered using ray tracing in Battlefield V (pre-release)

Photo Credit: Nvidia

Ray Tracing

At its essence, ray tracing means that individual beams of light are computed as they are emitted from a source, reflect off and refract through various surfaces, interact with other rays, and finally hit the eye of the viewer. As opposed to rasterisation, which involves drawing and mapping millions of 3D polygons per 2D frame and then giving them colour and texture, ray tracing follows photons as they bounce around a generated environment. This way, effects like depth of field, shadows, reflections, and even motion are generated for each individual ray, and when combined, they can be as realistic as a photo. Ray tracing can also account for light sources and reflective surfaces outside the player’s current field of view.
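The core operation can be sketched in a few lines of Python: fire a ray, find where it hits a surface, and work out the direction in which it bounces. This is a minimal illustration under our own assumptions, not Nvidia's implementation. The scene is a single made-up sphere, the function names `ray_sphere_hit` and `reflect` are hypothetical, and the ray is traced from the viewer's position into the scene, which is how renderers commonly do it in practice.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest sphere hit, or None.

    Sketch only: assumes the ray starts outside the sphere.
    """
    oc = tuple(o - c for o, c in zip(origin, center))
    # Quadratic coefficients for |origin + t * direction - center|^2 = radius^2,
    # with a == 1 because the direction has unit length.
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * c
    if disc < 0:
        return None  # The ray misses the sphere entirely.
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 0 else None


def reflect(direction, normal):
    """Mirror a direction about a unit-length surface normal."""
    d_dot_n = sum(d * n for d, n in zip(direction, normal))
    return tuple(d - 2.0 * d_dot_n * n for d, n in zip(direction, normal))


# Made-up scene: a viewer at the origin looking down -Z at a sphere 5 units away.
origin = (0.0, 0.0, 0.0)
direction = (0.0, 0.0, -1.0)
center = (0.0, 0.0, -5.0)
radius = 1.0

t = ray_sphere_hit(origin, direction, center, radius)
hit_point = tuple(o + t * d for o, d in zip(origin, direction))
normal = tuple((p - c) / radius for p, c in zip(hit_point, center))
bounced = reflect(direction, normal)
```

A real frame repeats this for millions of rays against millions of triangles, with each bounce potentially spawning further rays, which is why dedicated hardware for the intersection tests matters so much.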

Ray tracing has been used for cinematic effects and pre-rendered video for years now, but it has been too computationally intense to pull off in real-time, which is how game graphics are generated. Nvidia claims that Turing is the tipping point for when real-time ray tracing can be achieved on a single consumer GPU. Beyond gaming, there are major applications in the fields of architecture, engineering, product or industrial design, lighting design, and many more.

While that sounds great, we aren’t ready to throw rasterisation out entirely. Of the 11 games that have so far been announced to support ray tracing, Battlefield V uses it mainly for reflections, and Shadow of the Tomb Raider is using it for realistic shadows on top of rasterised scenes. You should also keep in mind that none of these games can support ray tracing immediately; those that have released already or are close to releasing will be updated with patches at some point in the future. Nvidia is leveraging Windows ML (Microsoft’s machine learning platform API) and DirectX Ray Tracing, an extension to DirectX 12, which will only be released with the upcoming Windows 10 October 2018 Update.

Just like for DLSS, Nvidia sent us a one-off demo to show how its ray tracing tech looks. This is the same sequence shown during the GeForce RTX launch event, featuring two stormtroopers and Captain Phasma from Star Wars interacting in an elevator. The demo shows off incredibly detailed reflections on the curved surfaces of their suits and helmets. While the scene is rasterised, ray tracing is used for area lighting, ambient occlusion, reflections, and shadows. Nvidia says that this demo also uses DLSS, and it runs up to 6X faster on Turing compared to an equivalent Pascal GPU.

![]()

Shadows rendered using current-day shadow mapping (top) and ray tracing (bottom)

Photo Credit: Nvidia

Should you buy an Nvidia GeForce RTX graphics card right now?

If you’ve been waiting for the right time to buy the latest and greatest PC hardware, there’s finally something out there that will satisfy that itch. To use any of these cards fully, though, you’ll need a flagship-class CPU from either Intel or AMD as well as plenty of RAM, a large SSD, a heavy-duty power supply, and of course a 4K monitor (preferably with a high refresh rate and G-Sync). All told, that’s a huge amount of money, and will severely limit Nvidia’s audience. For those with no budget constraints at all, the GeForce RTX series could make sense as an upgrade, either now or in a few months’ time. If you do buy one, you’ll be set for the next several years’ worth of top-tier games. Do check out our review of the GeForce RTX 2080, in the form of the Zotac Gaming GeForce RTX 2080 Amp.

Nvidia calls this its biggest architectural leap in over a decade. Unfortunately there are no mid-level GeForce RTX GPUs yet, so the vast majority of gamers who can’t drop over Rs. 50,000 on a graphics card upgrade are out of luck. Mainstream gamers should keep looking out for price drops on older GeForce GTX 10 series cards and pick one up if it seems like a good deal, instead of worrying about missing out. Thankfully, prices are approaching sensible levels and you can even buy Nvidia’s Founders Edition cards for around their original launch MRPs right now. There’s no telling when Nvidia’s mid-range and entry-level gaming products will be refreshed, but in any case, we’d wait to see how much they improve performance and how well they support DLSS or ray tracing before advising anyone to buy them. We’re also extremely curious to see how the company will set pricing and fill the gap it has created.